While I have to admit that I've been using Zabbix since the 1.8.x era, I also have to admit that I'm not an expert, and that one can learn new things every day. I recently had to implement a new template for a custom service that is multi-instance aware: it can be started multiple times on the same node, each instance with its own set of settings (like the TCP port on which to listen, etc.), and the number of running instances can differ from one node to the next.

I was thinking about the best way to implement this in Zabbix, and my initial idea was to have one template per possible instance type, each using macros defined at the host level to know which port to check, etc. In practice that means duplicating into Zabbix what configuration management (Ansible in our case) already has to know to deploy such an app instance.

But in parallel, I have always liked the fact that Zabbix itself has internal tools to auto-discover items, and so triggers for those: that's called Low-level Discovery (LLD for short).

By default, if you use (or have modified) some Zabbix templates, you can see those in action for the mounted filesystems or the network interfaces present in your Linux OS. That's the "magic": you added a new mount point or a new interface? Zabbix discovers it automatically, starts monitoring it, and also graphs values for it.

So back to our monitoring problem and the need for multiple templates: what if we could use LLD too, and so have Zabbix automatically check all our deployed instances? The good news is that we can: one can create custom LLD rules, so it works out of the box once a single template is added to those nodes.

If you read the Zabbix documentation on custom LLD rules, you'll see some examples of a script called at the agent level, from the Zabbix server, at a periodic interval, to "discover" those custom checks. The interesting part is that what gets returned to the Zabbix server is a JSON document, sent to a new key that is declared at the template level.

So it (usually) goes like this :

- create a template

- create a new discovery rule, give it a name and a key (and optionally add Filters)

- deploy a new UserParameter at the agent level that reports, under that key, the JSON string to declare to the Zabbix server

- Zabbix server receives and parses that JSON and, based on the macros it returns, creates items, triggers and so on from the Item prototypes and Trigger prototypes you defined
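
To make the last two steps concrete, here is a rough Python sketch of the JSON shape a discovery rule expects: a `data` list with one object per discovered entity, whose keys are LLD macros. The macro names are the ones used later in this post; the `lld_payload` helper and the sample instance list are only illustrative, not something Zabbix provides.

```python
import json


def lld_payload(instances):
    """Return the discovery JSON as a single-line string."""
    data = [
        {
            # One object per discovered instance; keys are LLD macros.
            "{#INSTANCE}": inst["name"],
            "{#RPCPORT}": str(inst["rpc_port"]),
            "{#HTTPPORT}": str(inst["http_port"]),
        }
        for inst in instances
    ]
    return json.dumps({"data": data})


# Illustrative sample data, shaped like the Ansible variables shown later.
instances = [
    {"name": "namespace_rpms", "rpc_port": 8443, "http_port": 8444},
    {"name": "namespace_centos", "rpc_port": 8445, "http_port": 8446},
]

payload = lld_payload(instances)
print(payload)
```

Each object in `data` ends up as one set of items and triggers on the Zabbix side, with the macros replaced by the values sent.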

Magic! ... except that in my specific case, I never allowed the zabbix user to really launch commands, due to limited rights and also the SELinux context in which it's running (for interested people, it's running in the zabbix_agent_t context).

I certainly didn't want to change that base rule for our deployments, but the good news is that you don't have to use UserParameter for LLD! It's true that if you look at the existing discovery rule "Network interface discovery", you'll see the key net.if.discovery, which is used for everything that follows, and that its Type is "Zabbix agent". But we can pick something else in that list, like we already do for a "normal" check.

I'm already (ab)using the Trapper item type for a lot of hardware checks. The reason is simple: as the zabbix user is limited (and I don't want to grant it more rights), I have some scripts checking for hardware RAID controllers (if any), etc., and reporting back to Zabbix through zabbix_sender.

Let's use the same logic for the JSON string to be returned to the Zabbix server for LLD (as yes, Trapper is in the list for the discovery rule Type).

It's even easier for us, as we'll control that through Ansible: it's what is already used to deploy/configure our RepoSpanner instances, so we have all the logic there.

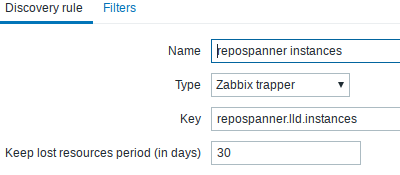

Let's first start by creating the new template for repospanner, and create a discovery rule (detecting each instance and its settings):

You can then apply that template to host[s] and wait ... but first we need to report back from agent to server which instances are deployed/running. So let's see how to implement that through ansible.

To keep it short, in Ansible we have the following (default values, not the correct ones) variables (from roles/repospanner/default.yml):

    ...
    repospanner_instances:
      - name: default
        admin_cli: False
        admin_ca_cert:
        admin_cert:
        admin_key:
        rpc_port: 8443
        rpc_allow_from:
          - 127.0.0.1
        http_port: 8444
        http_allow_from:
          - 127.0.0.1
        tls_ca_cert: ca.crt
        tls_cert: nodea.regiona.crt
        tls_key: nodea.regiona.key
        my_cn: localhost.localdomain
        master_node: nodea.regiona.domain.com # to know how to join a cluster for other nodes
        init_node: True # To be declared only on the first node
    ...

That simple example has only one instance, but you can easily see how to have multiple ones. So here is the logic: let's have Ansible, when configuring the node, create the file that will be used by zabbix_sender (triggered by Ansible itself) to send the JSON to the Zabbix server. zabbix_sender can read an input file in which each line holds three fields (see the man page):

- hostname (or '-' to use name configured in zabbix_agentd.conf)

- key

- value

Those three fields have to be separated by one space only and, importantly, you can't have an extra empty line (something you'll easily spot when playing with this for the first time).

What does our file (roles/repospanner/templates/zabbix-repospanner-lld.j2) look like?

    - repospanner.lld.instances { "data": [ {% for instance in repospanner_instances -%} { "{{ '{#INSTANCE}' }}": "{{ instance.name }}", "{{ '{#RPCPORT}' }}": "{{ instance.rpc_port }}", "{{ '{#HTTPPORT}' }}": "{{ instance.http_port }}" } {%- if not loop.last -%},{% endif %} {% endfor %} ] }

If you have already used jinja2 templates with Ansible, it's quite easy to understand. But I have to admit that I had trouble with the {#INSTANCE} one: that isn't an Ansible variable, but rather a fixed name for the macro that we'll send to Zabbix (and so reuse as a macro everywhere). Ansible, when trying to render the jinja2 template, was complaining about a missing end of comment: indeed, {# ... #} is a comment in jinja2. So the best way (thanks to people in #ansible for that trick) is to wrap it in {{ }} as a quoted string literal, so that it is rendered as {#INSTANCE} (nice to know if you have to do that too).

The rest is trivial: excerpt from monitoring.yml (included in that repospanner role):

    - name: Distributing zabbix repospanner check file
      template:
        src: "{{ item }}.j2"
        dest: "/usr/lib/zabbix/{{ item }}"
        mode: 0755
      with_items:
        - zabbix-repospanner-check
        - zabbix-repospanner-lld
      register: zabbix_templates
      tags:
        - templates

    - name: Launching LLD to announce to zabbix
      shell: /bin/zabbix_sender -c /etc/zabbix/zabbix_agentd.conf -i /usr/lib/zabbix/zabbix-repospanner-lld
      when: zabbix_templates is changed
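
The two tasks above follow a "write the file, then notify only if it changed" pattern. For readers less familiar with Ansible, here is a minimal Python sketch of that same logic. `write_if_changed` and `sender_command` are hypothetical helper names; the binary path and the `-c` / `-i` flags are the ones from the task above. The sketch only builds the command and does not run zabbix_sender.

```python
import os
import tempfile


def write_if_changed(path, content):
    """Write `content` to `path` only when it differs; return True if written."""
    try:
        with open(path, encoding="utf-8") as handle:
            if handle.read() == content:
                return False
    except FileNotFoundError:
        pass  # First run: the file does not exist yet.
    with open(path, "w", encoding="utf-8") as handle:
        handle.write(content)
    return True


def sender_command(input_file):
    """Build the zabbix_sender command line used by the Ansible task."""
    return [
        "/bin/zabbix_sender",
        "-c", "/etc/zabbix/zabbix_agentd.conf",
        "-i", input_file,
    ]


line = '- repospanner.lld.instances { "data": [] }\n'
with tempfile.TemporaryDirectory() as workdir:
    target = os.path.join(workdir, "zabbix-repospanner-lld")
    first = write_if_changed(target, line)   # file created
    second = write_if_changed(target, line)  # identical content, nothing to do
    command = sender_command(target) if first else None

print(first, second)
```

Ansible's `register` plus `when: ... is changed` gives you this for free, which is why the sender task only fires when the instance list actually changes.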

And this is how it is rendered on one of my test nodes:

    - repospanner.lld.instances { "data": [ { "{#INSTANCE}": "namespace_rpms", "{#RPCPORT}": "8443", "{#HTTPPORT}": "8444" }, { "{#INSTANCE}": "namespace_centos", "{#RPCPORT}": "8445", "{#HTTPPORT}": "8446" } ] }
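
Before letting Ansible send it, the rendered line can be sanity-checked. This is a rough sketch only, assuming the one-space-separated hostname, key and value format described earlier; `parse_sender_line` is a hypothetical helper, not part of Zabbix.

```python
import json


def parse_sender_line(line):
    """Split one zabbix_sender input line into (hostname, key, value)."""
    if not line.strip():
        raise ValueError("empty lines are not allowed in the input file")
    # Only the first two spaces are separators; the JSON value keeps its own.
    parts = line.rstrip("\n").split(" ", 2)
    if len(parts) != 3:
        raise ValueError("expected '<hostname> <key> <value>'")
    return tuple(parts)


rendered = (
    '- repospanner.lld.instances { "data": [ '
    '{ "{#INSTANCE}": "namespace_rpms", "{#RPCPORT}": "8443", "{#HTTPPORT}": "8444" }, '
    '{ "{#INSTANCE}": "namespace_centos", "{#RPCPORT}": "8445", "{#HTTPPORT}": "8446" } ] }'
)

hostname, key, value = parse_sender_line(rendered)
# json.loads raises an error if the template produced invalid JSON
# (for example a trailing comma after the last instance).
instances = [row["{#INSTANCE}"] for row in json.loads(value)["data"]]
print(hostname, key, instances)
```

A check like this catches the two classic mistakes with the template: a stray empty line, and a comma left after the last loop iteration.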

As Ansible automatically pushes that back to Zabbix, the Zabbix server can start creating (through LLD, based on the item prototypes) checks, triggers and graphs, and so start monitoring each new instance. You want to add a third one (we have two in our case)? Ansible pushes the config, re-renders the file from the .j2 template and notifies the Zabbix server, etc.

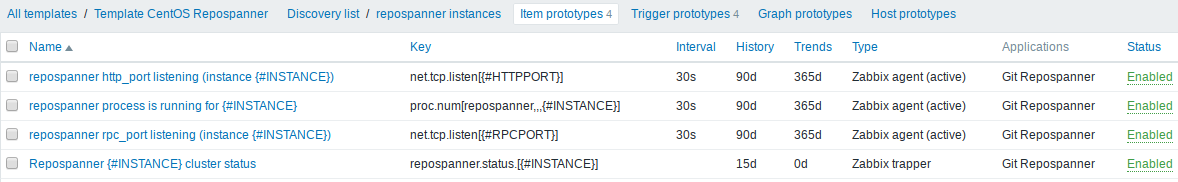

The rest is just "normal" operation for zabbix : you can create items/trigger prototypes and just use those special Macros coming from LLD :
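
To picture what Zabbix does with those macros, here is a small Python sketch of the substitution: one concrete item key per discovered instance, built from a single prototype. The prototype key `repospanner.http.status[...]` is purely hypothetical (it is not the key used in my template), and `expand` only mimics the idea, not Zabbix's actual implementation.

```python
def expand(prototype, macros):
    """Replace every LLD macro in `prototype` with its discovered value."""
    for name, value in macros.items():
        prototype = prototype.replace(name, value)
    return prototype


# Same data as the rendered file sent through zabbix_sender.
discovered = [
    {"{#INSTANCE}": "namespace_rpms", "{#RPCPORT}": "8443", "{#HTTPPORT}": "8444"},
    {"{#INSTANCE}": "namespace_centos", "{#RPCPORT}": "8445", "{#HTTPPORT}": "8446"},
]

# Hypothetical item prototype key, for illustration only.
prototype_key = "repospanner.http.status[{#INSTANCE},{#HTTPPORT}]"

item_keys = [expand(prototype_key, row) for row in discovered]
for item_key in item_keys:
    print(item_key)
```

The same macros can be used in prototype names, trigger expressions and graph titles, so one prototype covers every instance on every node.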

It was worth spending some time in the LLD doc and in #zabbix to discuss LLD: once you see the added value, and that you can automatically configure it through Ansible, you can see how powerful it can be.